February 26, 2021

Choosing Your Next Data Center Fabric: Cisco’s ACI or EVPN, Part 2

A technical comparison between Cisco’s fabric options: Application Centric Infrastructure and Ethernet VPN.

Cisco customers have a choice between Application Centric Infrastructure (ACI) and Ethernet VPN (EVPN) for building their data center network fabrics. Since these technologies are thought to be interchangeable, the choice often falls to cultural, political, or other “Layer 8” decision-making. But the fabric technologies are, in fact, different in ways both subtle and significant, and one’s choice has a profound impact on fabric orchestration, operations, and staffing.


I hope you’ll join me all the way through this three-part blog series as I compare and contrast these technologies and provide a foundation for deciding on the best choice for your organization’s needs.

Physical Topologies for ACI and EVPN

ACI is very prescriptive. Each “pod” or data center must follow the spine/leaf/border leaf topology described in Part 1 of this blog series, or a multi-tier topology. Northbound links must connect to the border leaves. Fabric links must be 40 Gbps or faster. All leaves must connect to all spines. Leaves never connect to each other. East/west links between ACI pods or sites must be routed subinterfaces that connect to spine switches. And so on.

Such edicts sound onerous, but they are key to ACI’s benefits:

- New leaves, spines and APICs are added to the fabric automatically and securely.

- There is clear separation of underlay and overlay cabling.

- Cabling mistakes are immediately flagged.

- The only human config needed is to assign a node number to each device; all else is automatic.

- Scalability is verified at 400 leaves plus six spines in one pod, and more with multi-pod and multi-site.

- There is no ambiguity about how to add ports, upgrade software, increase bandwidth, etc.

- The fabric is self-healing with sub-second convergence times (~200 milliseconds).
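To make the cabling rules concrete, here is a minimal Python sketch that checks a list of links against three of the rules above: every leaf connects to every spine, leaves never connect to each other, and fabric links run at 40 Gbps or faster. It is only an illustration of the logic, not how APIC performs its checks. The `Link` type, the `check_pod` function and the device names are hypothetical, and border leaves and multi-tier topologies are ignored.

```python
from dataclasses import dataclass

MIN_FABRIC_GBPS = 40  # from the text: fabric links must be 40 Gbps or faster


@dataclass(frozen=True)
class Link:
    a: str     # device name at one end (hypothetical naming)
    b: str     # device name at the other end
    gbps: int  # link speed in Gbps


def check_pod(spines, leaves, links):
    """Return a list of human-readable violations of the spine/leaf rules."""
    spines, leaves = set(spines), set(leaves)
    problems = []
    connected = set()  # (leaf, spine) pairs that have a link
    for link in links:
        ends = {link.a, link.b}
        if ends <= leaves:
            problems.append(f"leaf-to-leaf link: {link.a} <-> {link.b}")
            continue
        leaf_end, spine_end = ends & leaves, ends & spines
        if len(leaf_end) == 1 and len(spine_end) == 1:
            if link.gbps < MIN_FABRIC_GBPS:
                problems.append(
                    f"slow fabric link ({link.gbps}G): {link.a} <-> {link.b}"
                )
            connected.add((next(iter(leaf_end)), next(iter(spine_end))))
    # Every leaf must reach every spine.
    for leaf in sorted(leaves):
        for spine in sorted(spines):
            if (leaf, spine) not in connected:
                problems.append(f"missing link: {leaf} <-> {spine}")
    return problems
```

An empty result means the cabling satisfies the three rules being checked. Anything else is the kind of mistake a prescriptive fabric can flag immediately.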

In contrast, EVPN is not at all prescriptive. The Data Center Network Manager (DCNM) fabric manager strongly encourages the use of a spine/leaf topology, but there’s nothing to prevent someone from “going rogue” and deploying EVPN across Campus, WAN, MAN and other ad-hoc topologies.

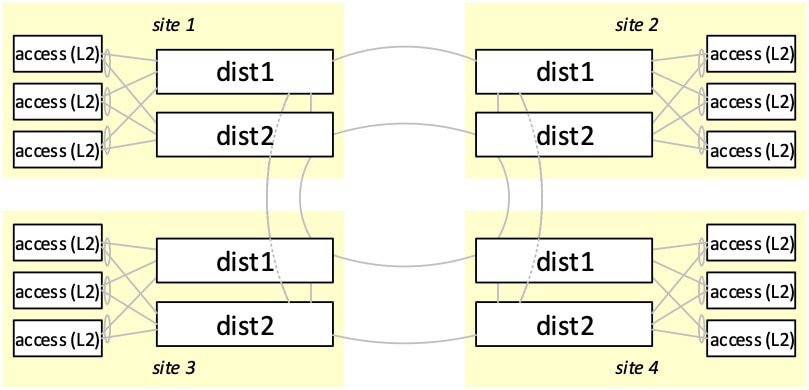

For example, consider a four-site fiber ring with site-local VLANs:

By adding EVPN to the eight “dist” switches that form the ring,

- VLANs can span sites with optimal routing and no changes to the access layer. (Alternatives of ACI, OTV, VPLS or SD-Access all cost much more, and L2 trunking is a nightmare.)

- L3 VRF separation can extend between sites. (Alternatives of MPLS and GRE are costly, and VRF‑Lite is labor intensive.)

- The overlay can be encrypted with MACsec or CloudSec. (The alternative, IPsec, costs more and isn’t wire-rate.)

As attractive as EVPN seems to be, the flip side is that operating EVPN in such a topology can be challenging, as discussed in the following section of this blog.

Logical Configurations for ACI and EVPN

With EVPN, the administrator is 100 percent responsible for understanding the underlay configuration [1], which requires CCNP or CCIE-level EVPN knowledge about BGP route types, VXLAN, IRB, VRF, underlay/overlay separation, etc. In many ways, EVPN’s L2 forwarding is an even bigger minefield than spanning tree ever was. Anecdotally, many organizations that manage their EVPN networks the same way they managed their old networks struggle with outages and L2 problems due to human error and lack of understanding. A test lab is critical. Most organizations will also benefit from disciplined use of an orchestration tool like DCNM and/or Ansible to drive consistency and avoid loose-cannon engineering mistakes.
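As one small example of the consistency checks such tooling can enforce, the Python sketch below flags VLANs that are mapped to different VNIs on different switches. It assumes a site convention that a given VLAN ID maps to the same VNI fabric-wide; VXLAN itself does not require this. The function name, switch names and input format are hypothetical.

```python
from collections import defaultdict


def find_vni_conflicts(intended):
    """Find VLANs that map to more than one VNI across switches.

    `intended` maps switch name -> {vlan_id: vni}.
    Returns {vlan_id: {vni: [switches using that VNI]}} for conflicts only.
    """
    by_vlan = defaultdict(lambda: defaultdict(list))
    for switch, mapping in intended.items():
        for vlan, vni in mapping.items():
            by_vlan[vlan][vni].append(switch)
    return {
        vlan: {vni: sorted(switches) for vni, switches in vnis.items()}
        for vlan, vnis in by_vlan.items()
        if len(vnis) > 1  # more than one VNI seen for this VLAN
    }
```

Running a check like this against the intended configuration before it is pushed catches a typo on one switch before it becomes an L2 problem in production.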

With ACI, APIC automatically handles the underlay. ACI administrators are welcome to explore and learn the ACI guts, but they don’t need to. Instead, the administrator is left to focus on the overlay, which is divided into physical leaf/port-specific details (for example, link speeds, breakout, CDP, LLDP, and LACP) and logical fabric-wide objects (for example, tenants/VRFs, Layer 3, Layer 2, and contracts). This physical/logical decoupling challenges new ACI users as they see simple tasks, like configuring Open Shortest Path First (OSPF) on a VLAN, become monumental exercises. However, the value of decoupling becomes crystal clear when one deploys ACI’s Virtual Machine Manager (VMM) integration with vSphere, NSX, KVM, Hyper-V, Kubernetes, OpenShift, etc., a benefit discussed more with APIs in the next section of this blog.

1. Cisco NX-OS VXLAN BGP EVPN documentation has two configuration examples worth reviewing.

As for the logical overlay configuration, ACI is best thought of as a data center access fabric. It supports all the mainstream networking one would expect for hosting servers, hypervisors, virtual machines, IP storage, etc. ACI excels at multitenancy. It also includes value-add extras like VMM, security contracts, policy-based redirect (PBR) for firewall/load-balancer insertion, and Fibre Channel and Fibre Channel over Ethernet (FC/FCoE) SAN.

However, ACI is not a great network core. For example, L3 routing on ACI (static/OSPF/BGP/EIGRP) requires extra care because of how L3Outs, route learning/redistribution and security contracts interact. Also, L3 routing configuration and monitoring in APIC is very different from the IOS-XE/XR and NX-OS CLIs that operations teams are familiar with. This explains why many ACI customers prefer to keep core and transit routing outside the fabric.

EVPN, on the other hand, is just one of a thousand NX-OS features that can be configured together by a competent administrator mindful of feature interoperability. One enterprise might deploy EVPN purely as a data center access fabric, mirroring ACI. Another might do its core and transit routing on the EVPN border leaves. Another might do it on the EVPN spines.

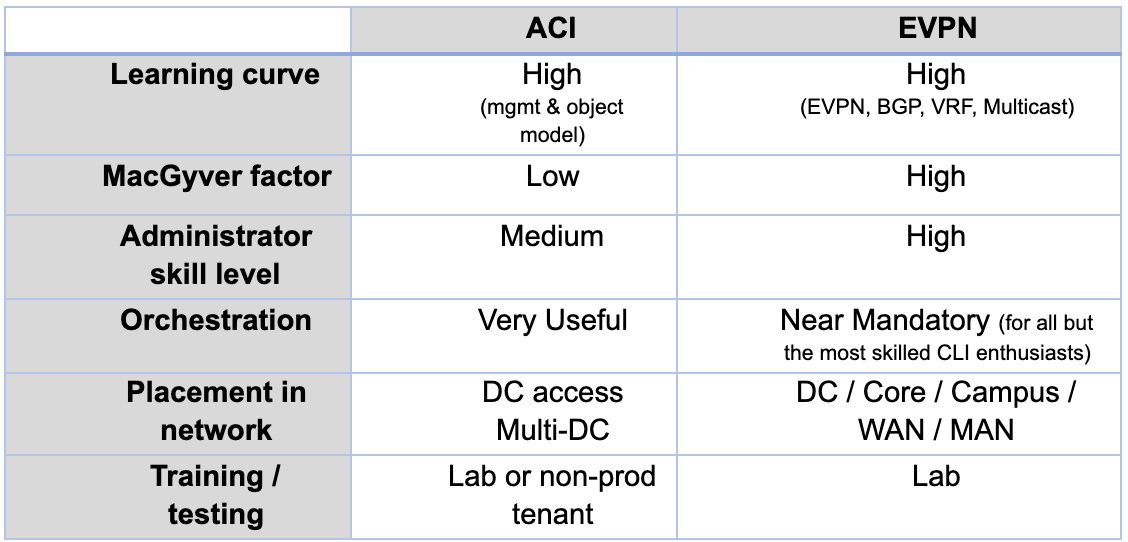

To summarize the logical configurations:

Application Programming Interfaces (APIs) for ACI and EVPN

Traditional network devices like Cisco NX-OS and Arista EOS have per-device configurations, where an imperative API can automate, device by device, anything an engineer might configure by hand. For example, the logic of adding new network 10.64.8.1/24 might look like this:

- On switches 11, 12, 13 and 14

  - Create anycast SVI 20 with subnet 10.64.8.1/24

  - Create VLAN 20 with name “Apps”

  - Trunk VLAN 20 on interfaces 13-17 (switches 11-12) and 32-39 (switches 13-14)

  - Bind VLAN 20 to VNID 9472 on the VPC anycast VXLAN Tunnel Endpoint (VTEP)

- On vSphere vCenter

  - Add VDS port-group “Apps” with VLAN 20
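The fan-out of that logic can be sketched in a few lines of Python. This is only an illustration of how one logical change expands into many per-device steps. The `imperative_steps` function is hypothetical, and the step strings are plain descriptions, not NX-OS or vCenter commands.

```python
def imperative_steps(vlan, name, vni, svi, trunks):
    """Expand one logical change into an ordered list of (target, action) steps.

    `trunks` maps switch number -> interface range to trunk the VLAN on.
    """
    steps = []
    for switch, ports in sorted(trunks.items()):
        target = f"switch {switch}"
        steps.append((target, f"create VLAN {vlan} named {name}"))
        steps.append((target, f"create anycast SVI {vlan} with {svi}"))
        steps.append((target, f"trunk VLAN {vlan} on interfaces {ports}"))
        steps.append((target, f"bind VLAN {vlan} to VNID {vni}"))
    # The hypervisor side is a separate system with its own API.
    steps.append(("vCenter", f"add VDS port-group {name} with VLAN {vlan}"))
    return steps


# Values taken from the example in the text.
trunks = {11: "13-17", 12: "13-17", 13: "32-39", 14: "32-39"}
steps = imperative_steps(20, "Apps", 9472, "10.64.8.1/24", trunks)
print(len(steps))  # 17 steps: four per switch, plus one on vCenter
```

Each of those steps is a separate call that can fail on its own, which is why the automation must also handle ordering, retries and partial rollback.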

Fabric managers like DCNM or Arista CVP and orchestration systems like Ansible + YANG can abstract that logic, but only in the hands of an engineer skilled in both network and automation. Teams new to orchestration often struggle – the first few modeling attempts rarely deliver the expected flexibility and will hamstring the network if moved into production too eagerly. Mature orchestration skills only come with patience, repetition and testing.

Declarative APIs

ACI instead uses a centralized object model with a declarative API, all handled by its APIC controllers. With this, the above logic could become:

- Create subnet 10.64.8.1/24 named “Apps”

ACI abstracts away the underlay (VTEPs, VNIDs, anycast SVIs or VPC anycast VTEPs) and VMM abstracts away the physical topology (switches, interfaces) and hypervisor internals (VLAN, VDS). The result is an API call simple enough to give to an app owner or trust to be called from an external orchestrator like Terraform, Consul or ServiceNow. Even within a network team’s own Ansible, it’s much easier to maintain a single logical description of the fabric than to have Ansible generate logical and physical networking for many switches.
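As a rough illustration of what such a declarative request can look like, the Python sketch below builds a nested JSON document describing the desired subnet. The class names `fvTenant`, `fvBD` and `fvSubnet` follow ACI's object model as I understand it, but treat the exact payload as an assumption and verify it against the APIC REST API documentation. The tenant name is hypothetical, and a real deployment also needs a VRF association and an EPG, which are omitted here.

```python
import json


def subnet_payload(tenant, bridge_domain, gateway_cidr):
    """Describe the desired state: a bridge domain with one gateway subnet.

    Nothing here names a switch, interface, VLAN or VNID; the controller
    is left to work out how to realize the object on the fabric.
    """
    return {
        "fvTenant": {
            "attributes": {"name": tenant},
            "children": [
                {
                    "fvBD": {
                        "attributes": {"name": bridge_domain},
                        "children": [
                            {"fvSubnet": {"attributes": {"ip": gateway_cidr}}}
                        ],
                    }
                }
            ],
        }
    }


# "ExampleTenant" is a made-up tenant name for illustration.
payload = subnet_payload("ExampleTenant", "Apps", "10.64.8.1/24")
print(json.dumps(payload, indent=2))
```

The same document can be sent again without harm, because it describes the end state and not the steps to reach it.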

The object model and API are, hands down, ACI’s most powerful feature, and this should be the number one criterion for any enterprise whose primary goal is to automate and orchestrate its infrastructure. Public clouds exclusively use such APIs, and VMware’s NSX-T recently adopted the same approach.

What Are Others Doing for Data Center Network Automation?

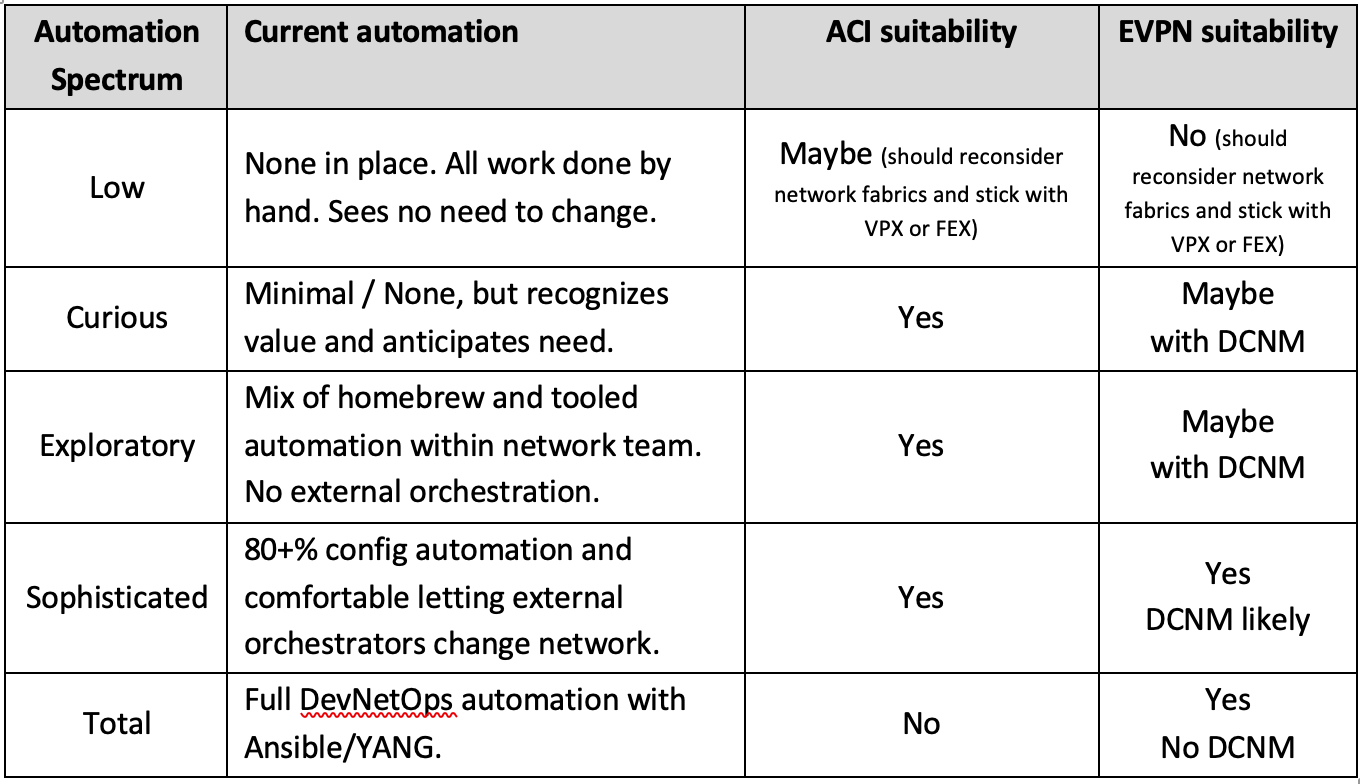

Stories of automation wizardry can be beguiling, but are often irrelevant depending on where an organization falls on the automation spectrum:

Most enterprises are “Curious” or “Exploratory” and it can take years to mature to “Sophisticated,” especially for larger teams. However, some are primed for such a move, and the deployment of a new network fabric may be the perfect catalyst to drive that shift.

In contrast, some enterprises have teams that are very comfortable with the CLI and see no need for new layers of automation. CDW feels that most of these organizations would be best served by sticking with a traditional VPC or FEX-based architecture, but recognizes that some do have disciplined and skilled staff who can deploy and operate EVPN successfully.

Blog Series Links

This blog series begins in Choosing Your Next Data Center Fabric: Cisco’s ACI or EVPN, Part 1, where I explain the high-level differences between these two technologies, and concludes in Choosing Your Next Data Center Fabric: Cisco’s ACI or EVPN, Part 3, where I focus on what differentiates them to help you decide which one is right for you.